Overcoming database bottlenecks: A Journey with ProxySQL

| published by | simha srivatsa |

|---|---|

| in blog | Instawork Engineering |

| original entry | Overcoming database bottlenecks: A Journey with ProxySQL |

Introduction

Startups grow fast, and scaling infrastructure to keep up can be challenging. As companies expand, efficiency, scalability, and reliability become crucial for smooth operations and meeting market demands. This blog post will discuss how ProxySQL, an advanced MySQL proxy server, helped us manage our SQL traffic and connections as we scaled.

The Problem

Instawork faced a critical growth stage two years ago that began to strain our infrastructure. As our traffic peaked, it led to too many MySQL connections and frequent alerts because our Django app created a new database connection for every HTTP request.

MySQL’s blocking I/O and thread-per-connection model became a bottleneck as connections increased, making a strong connection pooling mechanism essential.

We had a primary database and multiple replicas, but without load balancing, traffic was uneven and resources were underused.

Choosing the Right SQL Traffic Manager: Why ProxySQL Stood Out

To solve our immediate problems, the most important features we cared about were connection pooling and load balancing. Any additional features were a bonus. We evaluated a few options that could meet our requirements against the list of features.

After careful consideration, ProxySQL stood out as the best option. It gave us what we wanted but had other features like query routing, caching and transaction support. We realised that transaction awareness is also a critical feature for an SQL proxy. All queries inside a transaction block must execute on the primary database, which ProxySQL gave us out of the box. Additionally, it had good community support to assist us when needed, making it an obvious choice

Choosing the Right Deployment method

The next step was to integrate ProxySQL into our stack which uses the AWS Elastic Container Service (ECS).

We evaluated a few deployment options for ProxySQL within ECS:

- Sidecar Deployment: This means adding a ProxySQL container to the same ECS task that runs our Django containers. So, each Django instance would have its instance of ProxySQL. This technique offers low latency between Django and ProxySQL and eliminates single points of failure.

- Centralised Cluster: We create a cluster of multiple ProxySQL nodes that collaborate to handle traffic. The cluster has a single URL that all our applications can connect to. Each node in the cluster shares the responsibility for serving a portion of the overall traffic.

- Hybrid Deployment: This approach combines sidecar and cluster deployments. Each Django container has its own accompanying ProxySQL container that connects to a centralised ProxySQL cluster, giving us the best of both worlds.

We decided to start with the sidecar approach because of its simplicity. It allows us to explore ProxySQL’s functionalities thoroughly and understand its impact on our systems without making big changes to our infrastructure.

ProxySQL sidecar - Gains and challenges

Integrating ProxySQL as a sidecar brought a mix of enhancements and some practical challenges. Here’s what we observed on production after routing our application’s database traffic through ProxySQL.

Gains:

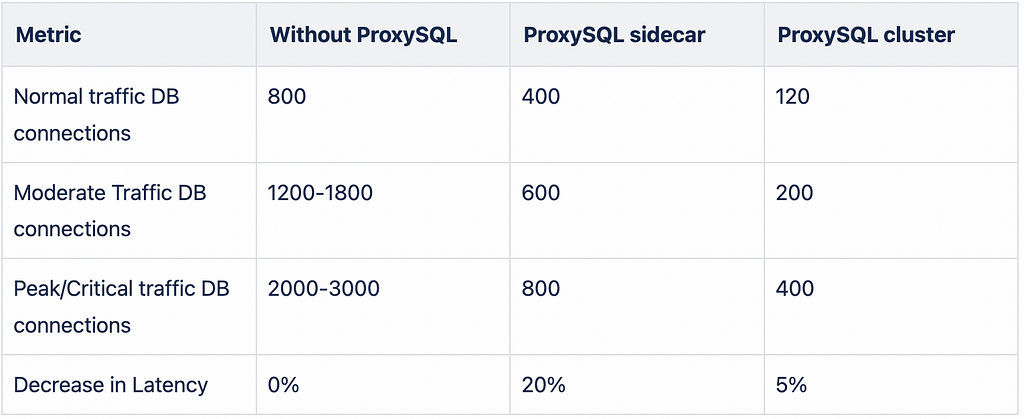

- Faster Response Times: We saw a great 20% improvement in response times, thanks to ProxySQL’s connection pooling.

- Fewer Connections: MySQL connections dropped by 60%, meaning no alerts from our monitoring systems during peak traffic.

- Better Load Distribution: ProxySQL spread our read traffic across multiple read replicas, fixing previous issues with uneven replica usage.

- Resilience: Running each ProxySQL instance as a sidecar reduced the risk of a single point of failure, making our system more robust.

The Challenges:

- Connection Creep with Scaling: Our autoscaling setup increased the number of ProxySQL containers alongside Django containers, causing an increase in connection count and diluting the benefits.

- Administration: Managing configurations for ProxySQL nodes was tricky, and making changes to all containers in real time was a pain.

- Observability: Monitoring and exporting metrics from tens of ProxySQL containers was hard too.

After three months, it was clear that using ProxySQL brought big advantages, but the challenges made us think about switching to a clustered approach.

Moving to the clustered approach

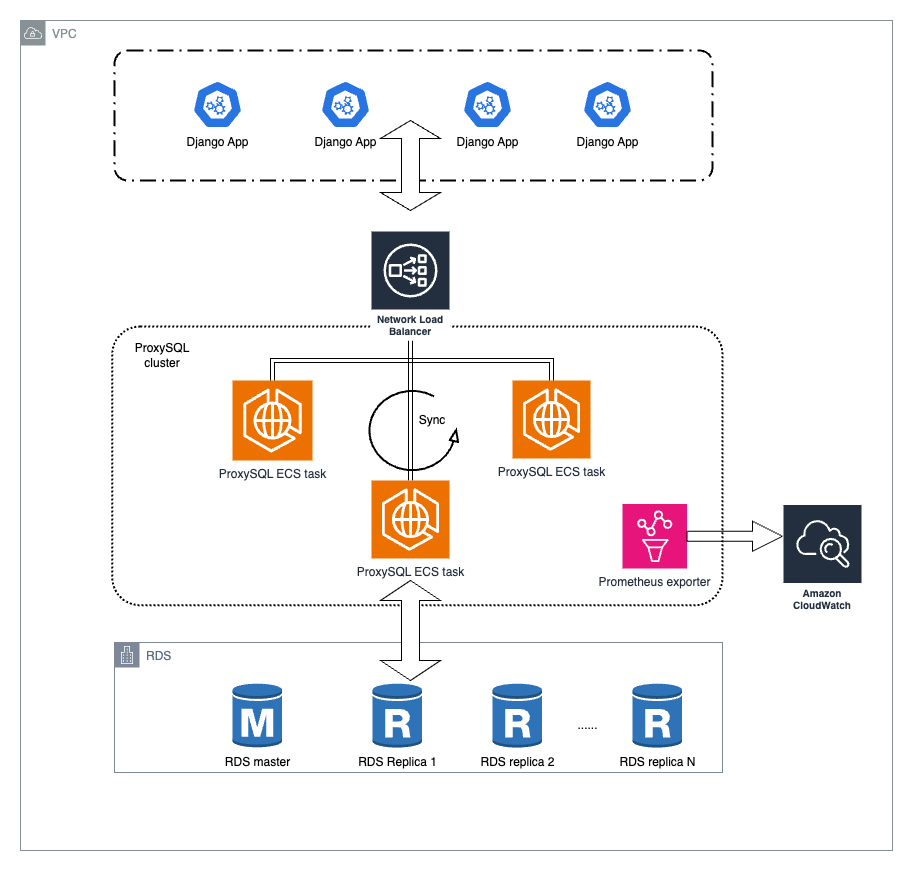

Here’s how we set up a ProxySQL cluster on ECS.

Cluster Configuration on ECS

- ProxySQL cluster ECS service: A simple and effective way to set up a cluster is to use an ECS service with multiple tasks. Each task can act as a separate node in the cluster.

- Network Load Balancer (NLB) Integration: To distribute incoming traffic between all the nodes we used an AWS Network Load Balancer and set the ECS tasks as targets.

- DNS for Easy Access: We wanted a simple URL to connect to ProxySQL, so we created a shorter URL in route53 with a CNAME to the load balancer, Ex: "proxysql.production.internal" so that it's easily accessible from inside our VPC.

Gains:

- Even fewer Connections: We’ve noticed an 80% decrease in connection count since we set up the cluster compared to our pre-ProxySQL numbers.

- Easy to scale: The cluster allows us to scale the ProxySQL nodes independently from our Django application, providing flexibility to adjust resources based on actual demand.

- Less Connection Creep with Autoscaling: Unlike the sidecar setup, the clustered approach doesn’t lead to a proportional increase in connections as our application scales due to traffic, making it more efficient under varying loads. Ex: Even when we saw 4x traffic, the connection count was still 50% of pre-ProxySQL numbers under normal load.

- Easy to manage: Centralised management of the ProxySQL nodes reduces complexity and ensures that all configurations and updates are consistently applied across the cluster.

- Increased Stability: Using the NLB adds a layer of fault tolerance. If a node becomes unhealthy, the NLB automatically redirects traffic to healthy nodes, reducing downtime and enhancing the reliability of our database interactions.

Drawbacks:

- Slight increase in latency: An additional network call is introduced between ProxySQL and the NLB causing an increase in latency.

Despite the minor drawback, this setup proved more reliable because it performed better under peak and critical traffic.

Comparing different deployments

Conclusion

Over the past two years, ProxySQL has been a great tool for managing our database traffic. We haven’t seen an alarm for excessive connections since then. It has also safeguarded our application from incidents like replica crashes. Additionally, it has streamlined our operations by helping with zero-downtime database upgrades and general database management.

Thank you for following our journey with ProxySQL. We truly appreciate your interest and hope that these insights have been helpful. Stay tuned for Part 2, where we will dive deeper into the techniques we used for administering and monitoring a ProxySQL cluster. See you soon!

Overcoming database bottlenecks: A Journey with ProxySQL was originally published in Instawork Engineering on Medium, where people are continuing the conversation by highlighting and responding to this story.