A tale of performance — ECS, boto3 & IAM

| published by | Utkarsh Bansal |
|---|---|
| in blog | Instawork Engineering |
| original entry | A tale of performance — ECS, boto3 & IAM |


While going through NewRelic and looking at some of the worst offenders in terms of performance, we discovered some calls to S3 that were taking over 5000ms❗️

This was alarming, as our SLA for API/View response times was 250ms, and these network calls were destroying our KPIs. Through a series of experiments and iterations, we brought the S3 access times down to 7ms, and learned some fascinating things about configuring Boto3 sessions and assigning ECS task roles along the way.

So let’s get to it!

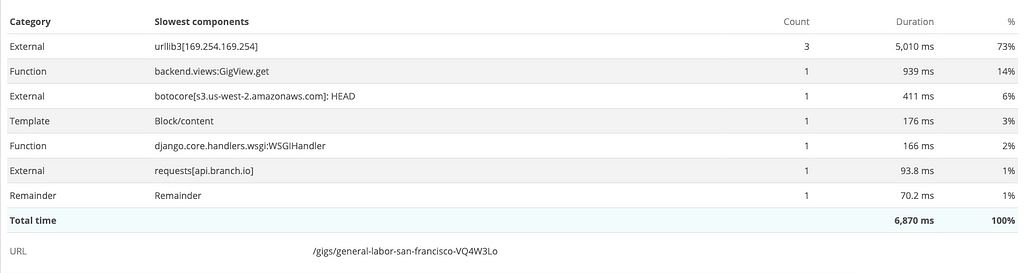

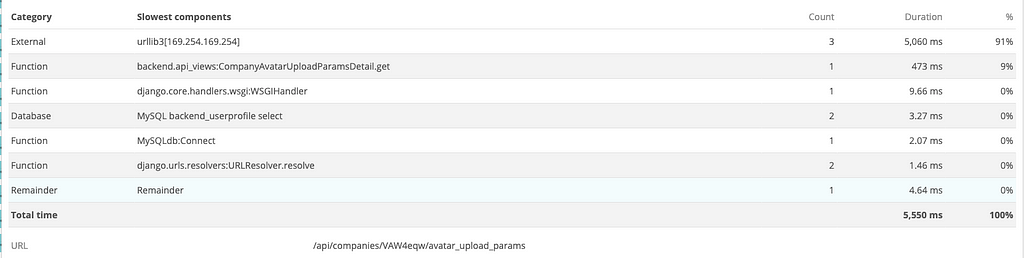

Here are some of the more intriguing traces:

backend.views:GigView.get responded in 6.8 seconds, with 3 network calls to 169.254.169.254 taking up 5000ms and a HEAD request to S3 taking up another 400+ ms. These network calls account for ~80% of the total response time.

backend.api_views:UserAvatarUploadParamsDetail.get responded in 5.5 seconds with 3 network calls to 169.254.169.254 taking up 5000ms. These network calls account for ~90% of the total response time.

Background

How does IAM work?

Before we dig into the calls to 169.254.169.254, we first need to understand how IAM, and specifically, how IAM roles work.

When our application needs to access a specific resource on AWS (think S3), it first needs the required access levels/permissions. There are two commonly used ways to provide this access via IAM.

- Create an IAM user with the required permissions and pass that user’s access key ID and secret access key to the code. This is what we do when we want to access S3 from our local dev machines.

- Create an IAM role and attach the role to the server running the code. This is the recommended method of giving access to entities inside our VPC. It is a bit easier to manage and more secure since we don’t need to pass around keys/secrets ourselves.

What happens when you attach a role to an EC2 server?

Once you have attached a role to an EC2 server, any code running on it can ask for temporary credentials that allow it to perform the actions the role’s policies permit.

Here are the rough steps boto3 follows to find credentials when we make a call to S3:

- Check whether `aws_access_key_id` and `aws_secret_access_key` were passed explicitly to the boto3 call. This fails because we don’t set these keys.

- Check the ~/.aws/credentials file. This fails as well, because we don’t create that file either.

- Use the instance metadata service (IMDS) and call the EC2 metadata endpoint to get temporary credentials. See this for reference. The metadata endpoint is a fixed IP address that is, by default, accessible by all EC2 servers. The IP is 169.254.169.254 — these are the slow network calls we see in our NewRelic traces.
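To make step 3 concrete, here is a minimal sketch of an IMDSv2 credential lookup using only Python’s standard library. The endpoint paths and header names follow the AWS EC2 instance metadata documentation; the helper names (`token_request`, `credentials_request`, `fetch_instance_credentials`) are hypothetical, and this is not how boto3 implements the lookup internally. The network calls only succeed on an EC2 instance, or in a container that can actually reach the metadata endpoint.

```python
import json
import urllib.request

# Metadata endpoint and IMDSv2 header names as documented by AWS.
IMDS_BASE = "http://169.254.169.254"
TOKEN_TTL_SECONDS = "21600"


def token_request() -> urllib.request.Request:
    """IMDSv2 step: PUT a request for a short-lived session token."""
    return urllib.request.Request(
        f"{IMDS_BASE}/latest/api/token",
        method="PUT",
        headers={"X-aws-ec2-metadata-token-ttl-seconds": TOKEN_TTL_SECONDS},
    )


def credentials_request(token: str, role_name: str = "") -> urllib.request.Request:
    """GET the attached role name (empty role_name) or that role's credentials."""
    url = f"{IMDS_BASE}/latest/meta-data/iam/security-credentials/{role_name}"
    return urllib.request.Request(url, headers={"X-aws-ec2-metadata-token": token})


def fetch_instance_credentials(timeout: float = 1.0) -> dict:
    """Hypothetical helper: only works where 169.254.169.254 is reachable."""
    with urllib.request.urlopen(token_request(), timeout=timeout) as resp:
        token = resp.read().decode()
    with urllib.request.urlopen(credentials_request(token), timeout=timeout) as resp:
        role_name = resp.read().decode().splitlines()[0]
    with urllib.request.urlopen(credentials_request(token, role_name), timeout=timeout) as resp:
        # JSON containing AccessKeyId, SecretAccessKey, Token and Expiration
        return json.loads(resp.read())
```

If the response to the token `PUT` never arrives, that first `urlopen` simply waits until `timeout` expires, which is the kind of stall we see in the traces.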

To summarize, the network calls to 169.254.169.254 are our app trying to get credentials to access S3.

Why is the call to 169.254.169.254 so slow?

Given that 169.254.169.254 is an internal AWS IP, a 5000ms response time seems excessive. Another point of interest is that a single call to a resource on S3 leads to three calls to this endpoint. If we just need credentials, shouldn’t a single call do the job?

As it turns out, there are two versions of IMDS: IMDSv1, which has been around for years, and IMDSv2, which AWS released around Q4 2019. Both v1 and v2 are available, but newer versions of the AWS SDKs prefer v2 and fall back to v1 only if calls to v2 fail.

> The AWS SDKs use IMDSv2 calls by default. If the IMDSv2 call receives no response, the SDK retries the call and, if still unsuccessful, uses IMDSv1. This can result in a delay. In a container environment, if the hop limit is 1, the IMDSv2 response does not return because going to the container is considered an additional network hop. To avoid the process of falling back to IMDSv1 and the resultant delay, in a container environment we recommend that you set the hop limit to 2.

Reference: AWS: Retrieving the Instance Metadata

It is also interesting to note that this issue has been raised against the AWS SDK for Go:

> go version go1.11.1 linux/amd64
>
> Getting credentials from an ec2 role takes 20 seconds. We have this issue on consistently on multiple different ec2 instances since a couple of days ago.
>
> To me it looks like an issue with the service, but I am not sure.

Reference: Relevant AWS Go SDK bug

This could explain why there are always three calls: the first IMDSv2 attempt, the retry, and the fallback to IMDSv1. Our code runs in Docker containers, so the response has to travel two hops to reach it, one more than a hop limit of 1 allows.
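The retry-and-fallback behaviour described in the AWS quote above can be modelled in a few lines. This is a simplified, hypothetical sketch: it counts attempts to obtain credentials rather than individual HTTP requests, and the 1-second timeout is an assumed placeholder, not the SDK’s actual setting.

```python
from typing import List, Tuple


def imds_attempts(v2_responds: bool, timeout_s: float = 1.0) -> Tuple[List[str], float]:
    """Simplified model of 'try IMDSv2, retry once, then fall back to IMDSv1'.

    Returns the attempts made and the seconds spent waiting on timeouts.
    """
    attempts: List[str] = []
    waited = 0.0
    for label in ("IMDSv2", "IMDSv2 retry"):
        attempts.append(label)
        if v2_responds:
            return attempts, waited
        # No response: the caller sits out the whole timeout before moving on.
        waited += timeout_s
    # Assumption: IMDSv1 answers promptly because only the IMDSv2 token
    # (PUT) response is subject to the hop limit.
    attempts.append("IMDSv1")
    return attempts, waited
```

With `v2_responds=False`, the model makes three attempts and waits out two full timeouts before IMDSv1 answers; with `v2_responds=True`, a single attempt suffices.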

What are hops, and why are they significant?

To ensure IP packets have a limited lifetime on the network, every IP packet carries an 8-bit Time to Live (IPv4) or Hop Limit (IPv6) header field, whose value specifies the maximum number of layer 3 hops (typically routers) the packet can traverse on the path to its destination.

Each time the packet arrives at a layer 3 network device (a hop), the value is reduced by one before the packet is routed onward. When the value reaches one, the next device that receives the packet discards it, because forwarding it would reduce the value to zero.
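A toy model of this rule makes the failure easy to see. It assumes, as the AWS quote above states, that delivering the IMDSv2 response into a container costs one extra hop; `packet_delivered` and `CONTAINER_EXTRA_HOPS` are hypothetical names, not part of any networking library.

```python
def packet_delivered(hop_limit: int, forwarding_hops: int) -> bool:
    """Toy model of TTL / Hop Limit handling along a path.

    hop_limit: initial value on the response (the instance's IMDS hop limit).
    forwarding_hops: layer 3 devices that must forward the packet onward.
    """
    ttl = hop_limit
    for _ in range(forwarding_hops):
        # The forwarding device decrements the value before routing it onward...
        ttl -= 1
        if ttl == 0:
            # ...and discards the packet instead of forwarding it with a value of zero.
            return False
    return True


# Assumption: reaching a container adds one forwarding hop on the host.
CONTAINER_EXTRA_HOPS = 1
```

`packet_delivered(1, CONTAINER_EXTRA_HOPS)` is `False`, so the response is dropped before it reaches the container, while `packet_delivered(2, CONTAINER_EXTRA_HOPS)` is `True`, which matches AWS’s recommendation of a hop limit of 2 for containers.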

So, with a hop limit of 1, the Docker networking layer simply drops the responses to IMDSv2 calls before they reach our containers!

Okay, what do we do about this?

Two things can be done here: we can either increase the hop limit to 2 so that our containers can use IMDSv2, or we can use ECS task roles.

Earlier, we listed a rough precedence order for how Boto3 finds credentials. That list is not complete! There is another option: ECS task roles. The actual precedence order (as the AWS CLI documents it; boto3’s chain is similar) is:

- Command-line options

- Environment variables

- CLI credentials file

- CLI configuration file

- Container credentials (ECS task roles)

- Instance profile credentials

Using ECS task roles is the recommended solution, but let’s first try increasing the hop limit.

Here is a stripped-down version of the core code of `UserAvatarUploadParamsDetail`, the part that generates the pre-signed URL:

```python
import boto3


def test_presigned_url():
    # A brand-new Session on every call, so credentials are resolved from scratch
    session = boto3.session.Session()
    s3_client = session.client("s3")
    response = s3_client.generate_presigned_url(
        "get_object",
        Params={
            "Bucket": "my-personal-bucket",
            "Key": "utkarsasdh.png",
        },
        ExpiresIn=3600,
    )
    print(response)
```

Let’s run this in a production container and time the function call using `%time test_presigned_url()`:

```
CPU times: user 42.6 ms, sys: 8.62 ms, total: 51.2 ms
Wall time: 5.06 s
```
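`%time` is an IPython magic. Outside IPython, a rough equivalent is to wrap the call with `time.perf_counter()`; `time_call` below is a hypothetical helper, and it reports wall time only, without the CPU breakdown.

```python
import time
from typing import Any, Callable, Tuple


def time_call(fn: Callable[..., Any], *args: Any, **kwargs: Any) -> Tuple[Any, float]:
    """Call fn once and return (result, wall time in milliseconds)."""
    start = time.perf_counter()
    result = fn(*args, **kwargs)
    elapsed_ms = (time.perf_counter() - start) * 1000.0
    return result, elapsed_ms
```

For example, `_, ms = time_call(test_presigned_url)` measures the same wall time that `%time` reports.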

Makes sense: this matches the data we see on NewRelic.

We now increase the hop limit using the following command:

```shell
aws ec2 modify-instance-metadata-options --instance-id i-XXXXXXXXXXXX --http-put-response-hop-limit 3
```

Let’s re-run `%time test_presigned_url()`:

```
CPU times: user 41 ms, sys: 12.5 ms, total: 53.5 ms
Wall time: 55.3 ms
```

Wall time varies from 50–60 ms. Awesome! We are down from 5000ms to 50ms!

This confirms our hypothesis.

Hang on, there’s more!

50ms is decent, but why does every single request need to fetch credentials? Can’t we use longer-lived credentials and just cache them?

Let’s take a look at the code again. We create a session with the line `session = boto3.session.Session()`. What is a session? See documentation here. A session stores configuration state and is responsible for figuring out authentication. Let’s remove it and run the example code from the boto3 docs instead.

```python
import boto3


def test_presigned_url():
    # session = boto3.session.Session()
    # s3_client = session.client("s3")
    # boto3.client() goes through boto3's module-level default session instead
    s3_client = boto3.client("s3")
    response = s3_client.generate_presigned_url(
        "get_object",
        Params={
            "Bucket": "my-personal-bucket",
            "Key": "utkarsasdh.png",
        },
        ExpiresIn=3600,
    )
    return response
```

Let’s time this code with `%time test_presigned_url()`:

```
CPU times: user 41 ms, sys: 12.5 ms, total: 53.5 ms
Wall time: 55.3 ms
```

Now run it a second time and look at the output:

```
CPU times: user 7.29 ms, sys: 0 ns, total: 7.29 ms
Wall time: 6.96 ms
```

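This is awesome(er)! We are down from 50ms to 7ms.

Okay, so what is happening here? How did it get faster? When we call `boto3.client()` directly, Boto3 uses a module-level default session that you don’t have to manage yourself. The first time this default session is used, Boto3 resolves the credentials and caches them, so later calls in the same process skip the trip to the metadata endpoint. Our original code created a brand-new session on every call, so it had to fetch credentials every time.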

Final Approach

- Reuse the default Boto3 session as much as possible.

- Attach roles directly to ECS tasks — use the same role as the EC2 servers’ ecsInstanceRole. This should give us the same performance benefits as increasing the hop value.

TL;DR

Our code was slow because calls to IMDSv2 were failing inside our Docker containers, forcing a slow retry and fallback to IMDSv1. Fixing this got the S3 access times down to 50 ms (100x speedup). By reusing the default Boto3 session instead of creating a new one every time we need to access S3, we further reduced the S3 access times to <10ms (500x speedup).

A tale of performance — ECS, boto3 & IAM was originally published in Instawork Engineering on Medium, where people are continuing the conversation by highlighting and responding to this story.